Andy Brown, Toph Whitmore, Misha Kuperman

Reissued from a 2016 article for the 20th anniversary of 9/11

Follow up to 9/18 — The First Trade @ML

Twenty years ago, I discovered what was then an unacknowledged truth: The internet was more resilient than a corporate network could ever be.

I was the CTO for Merrill Lynch’s Direct Markets team in New York City. After enduring the horror of 9/11, my technical colleagues and I faced an implacable, practical reality: Corporate network fiber (owned and managed by large network providers) had run under and through the World Trade Center complex. With that fiber severed, we had to reconnect our corporate networks, whether through a service provider or by some other means, both to New Jersey, where our recovery efforts were underway, and to the rest of the world, which depended on the services that ran in the tri-state region.

We looked at our options. We had only a few days to get the business running again and no time to rebuild a global network by traditional means. We realized that if we were to ensure the integrity of our network connectivity, we couldn’t rely on the “diversity” of fiber access provided by a third party. One of my colleagues brought in a large drill, drilled a hole through the wall from 570 Washington Street into UUNET in the building next door, and ran fiber through the hole to patch us directly into the internet at GigE speed. We then established a global network overlay, similar to today’s SD-WANs, using GRE tunneling to connect Merrill Lynch’s Tokyo, London, Singapore, Sydney, and New York offices. We ran this VPN-over-internet setup as our own managed service, using the internet as the primary fabric for connecting employees and partners to each other and to applications, for about 90 days after 9/11.
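GRE, the tunneling protocol behind that overlay, is simple enough to sketch. The following is a minimal, illustrative Python sketch (not the configuration we actually ran) that prepends the 4-byte base GRE header defined in RFC 2784 to an inner IPv4 packet and computes the tunnel MTU left after encapsulation. It assumes a 1500-byte path MTU, an outer IPv4 header with no options, and no optional GRE checksum; the function names are hypothetical. In practice the router or host stack wraps the result in an outer IPv4 header carrying protocol number 47.

```python
import struct

# Protocol Type field value for an IPv4 payload (EtherType 0x0800).
GRE_PROTO_IPV4 = 0x0800

OUTER_IPV4_HEADER_BYTES = 20  # assumes no IP options on the outer header
GRE_BASE_HEADER_BYTES = 4     # assumes no checksum (C bit = 0)


def gre_encapsulate(inner_ipv4_packet: bytes) -> bytes:
    """Prefix an inner IPv4 packet with the RFC 2784 base GRE header.

    The first 16 bits hold the C (checksum present) bit, reserved bits,
    and the version; with no checksum and version 0 they are all zero.
    The next 16 bits identify the payload protocol. The outer IPv4
    header (protocol 47) is added later by the sending stack.
    """
    header = struct.pack("!HH", 0, GRE_PROTO_IPV4)
    return header + inner_ipv4_packet


def tunnel_mtu(path_mtu: int = 1500) -> int:
    """Largest inner packet that fits without fragmenting the outer packet."""
    return path_mtu - OUTER_IPV4_HEADER_BYTES - GRE_BASE_HEADER_BYTES


if __name__ == "__main__":
    frame = gre_encapsulate(b"\x45" + bytes(19))  # placeholder 20-byte inner header
    print(frame[:4].hex())  # 00000800
    print(tunnel_mtu())     # 1476
```

Those 24 bytes of overhead are why GRE tunnels are commonly configured with an MTU of 1476 (or with TCP MSS clamping) so that inner packets are not fragmented on a standard 1500-byte path.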

In November 2001, I became head of Global Network Services (GNS) for Merrill Lynch. I was determined to learn from what had and hadn’t worked during the recovery and to apply those lessons across our global infrastructure. We built out a global internet overlay to replace the ISDN circuits that backed up our newly restored fiber connections, an architecture that was eventually deployed to every financial advisor branch in the world. We also applied the network “cloud” technique to build a virtualized B2B network with BT Radianz, replacing point-to-point third-party connectivity for the trading floors, and built a similar virtual cloud for trader voice.

Relying on internet exchange routing optimization and VPNs over GRE was an unorthodox approach, and a prelude to SD-WAN. We wouldn’t have been back in business six days after 9/11 without it. With the rollout of our internet overlay, connection speed, data traffic speed, and employee productivity went through the roof. We started to win over clients: Our availability and provisioning speed during a time of great service uncertainty gave Merrill Lynch a competitive advantage.

My favorite quote from that period came from a rep office in South America. One evening at 6 p.m. New York time, the branch manager, whom I had never met, called me and said, “Andy, we are dancing on our desks.” I asked him why. He explained that his team had to stay in the office until all post-trade activity was complete, which involved uploading data to New York and post-processing it there. Now he could go home at 6 p.m. and have dinner with his wife and kids instead of at midnight, thanks to a 10 Mbps internet VPN in place of a 128 kbps MPLS circuit, at one-tenth the price.
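The branch manager’s evening is easy to reconstruct with back-of-the-envelope arithmetic. This minimal Python sketch compares idealized transfer times for a hypothetical 50 MB end-of-day upload over the 128k circuit versus the 10MB VPN (read here as 10 Mbps). The payload size is an assumption for illustration, not a figure from that office, and the calculation ignores protocol overhead and latency.

```python
def transfer_seconds(payload_megabytes: float, link_kbps: float) -> float:
    """Idealized time to move a payload over a link, ignoring protocol overhead.

    Uses decimal units: 1 MB = 1,000,000 bytes and 1 kbps = 1,000 bits/s.
    """
    payload_bits = payload_megabytes * 8 * 1_000_000
    return payload_bits / (link_kbps * 1_000)


PAYLOAD_MB = 50  # hypothetical size of the daily post-trade upload

legacy = transfer_seconds(PAYLOAD_MB, 128)      # 128 kbps circuit
overlay = transfer_seconds(PAYLOAD_MB, 10_000)  # 10 Mbps internet VPN

print(f"128 kbps: {legacy / 60:.0f} min")    # 52 min
print(f"10 Mbps:  {overlay:.0f} s")          # 40 s
print(f"speedup:  {legacy / overlay:.1f}x")  # 78.1x
```

Whatever the real payload was, the ratio is fixed by the link speeds: roughly 78 times faster, before even counting the price difference.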

So what has happened since?

Fast forward twenty years. A number of forward-thinking enterprises have adopted a similar approach, using the internet as their network backbone, with proxy-based security and optimal data-traffic routing.

The rest of the enterprise world still stubbornly relies on a half-century-old network design that’s costly, inefficient, and, worse, impossible to secure. More interestingly, the reason behind this phenomenon is an irrelevant SLA that protects a path to nowhere: The applications and users have largely moved away from the endpoints of that path, the applications into the cloud and the users into their homes and remote offices. The “hub-and-spoke” network was designed to connect on-premises work, but today everyone works on the internet and in the “cloud,” and, during COVID and now with “hybrid work,” often not in an office at all. As recent cyberattacks have shown, legacy infrastructure is more than just a corporate burden; it’s a risk to business continuity and survival.

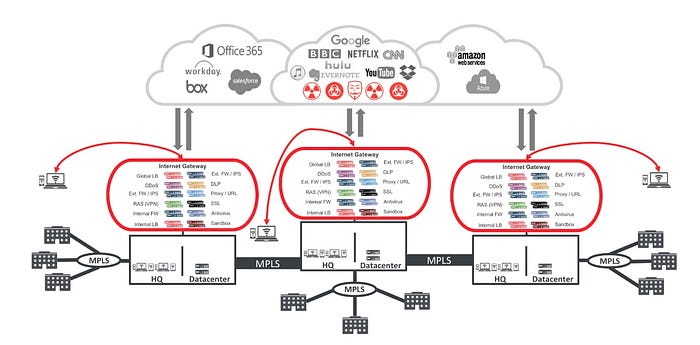

In these antiquated environments, enterprises build out and own their MPLS networks. Security is delivered by hardware appliances stacked at the internet gateways, which forces data traffic to be backhauled for security processing. That adds latency, hurts productivity, and, unless traffic is artificially constrained, still fails to capture a large portion of critical workloads.

Enterprises backhaul traffic from branch locations to a DMZ with internet ingress and egress points, where security policies (e.g., IDS/IPS, web filtering, APT protection, and many more) are applied before the traffic is transmitted to the internet. Those gateways were intended to be efficient, secure portals to the internet, but they’re not. Traffic volumes are different now, and business-critical apps are no longer just ERP, email, and enterprise-hosted applications. Backhaul architectures become exceedingly stressed when users do all their work outside the perimeter, say in Office 365 or Salesforce.

Let’s take a look at that backhauling performance hit.

In Figure 1 above, branches connect from left to right, from regional office to HQ/datacenter, over MPLS networks, with internet ingress/egress through gateways housed in the datacenter. For example, the traffic of an enterprise Salesforce user sitting in San Francisco would be carried over the network via VPN to the New York datacenter, then sent through the internet gateway all the way back to Salesforce.com in California. We can assume 90 milliseconds (ms) of latency from San Francisco to New York and another 90 ms back, from distance alone. On top of that come delays from each of the policy/control boxes stacked in the DMZ, as each data packet is opened and inspected for specific issues (e.g., intrusion detection, firewall, APT, and so on).
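The arithmetic in that example can be made explicit. This minimal Python sketch adds up one-way delay along the backhauled path and along a direct path. The two 90 ms legs come from the example above; the per-appliance inspection delays and the 10 ms direct leg through a nearby cloud security node are hypothetical placeholders, not measurements.

```python
from collections.abc import Mapping


def path_latency_ms(legs_ms: Mapping[str, float],
                    inspection_ms: Mapping[str, float]) -> float:
    """Total one-way delay: propagation over each leg plus per-box inspection."""
    return sum(legs_ms.values()) + sum(inspection_ms.values())


# Backhauled: San Francisco -> New York DMZ -> back to Salesforce in California.
backhaul = path_latency_ms(
    legs_ms={"SF->NY (VPN)": 90, "NY gateway->CA (internet)": 90},
    # Hypothetical per-appliance delays for the stacked DMZ boxes.
    inspection_ms={"firewall": 1, "IDS/IPS": 3, "web filter": 2, "APT": 4},
)

# Direct: San Francisco -> nearby cloud proxy -> Salesforce (hypothetical values).
direct = path_latency_ms(
    legs_ms={"SF->local cloud edge->CA": 10},
    inspection_ms={"cloud proxy inspection": 5},
)

print(backhaul, direct)  # 190 15
```

The distance term dominates: no amount of appliance tuning removes the 180 ms cross-country detour, whereas inspecting traffic close to the user avoids it entirely.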

Partly because of its fixed location, the entire DMZ has become a bottleneck for enterprise users accessing cloud services. (And that’s pretty much all users.) Bandwidth, particularly for remote users, becomes an issue. In my neighborhood, 2 Gbps internet access is available from my cable provider. Enterprise users with such fast access at home will quickly consume limited, hardware-constrained DMZ bandwidth, further exacerbating contention.
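A quick calculation shows how fast home bandwidth can overwhelm a central gateway. This Python sketch assumes a hypothetical DMZ inspection stack rated at 10 Gbps and users on the 2GB cable tier mentioned above, read as 2 Gbps; both figures are placeholders chosen for illustration, and the function names are hypothetical.

```python
import math


def users_to_saturate(gateway_gbps: float, per_user_gbps: float) -> int:
    """Smallest number of users whose combined peak demand meets gateway capacity."""
    return math.ceil(gateway_gbps / per_user_gbps)


def fair_share_mbps(gateway_gbps: float, active_users: int) -> float:
    """Bandwidth each active user gets if the gateway is split evenly."""
    return gateway_gbps * 1_000 / active_users


GATEWAY_GBPS = 10   # hypothetical DMZ capacity
HOME_LINK_GBPS = 2  # home cable tier, read as 2 Gbps

print(users_to_saturate(GATEWAY_GBPS, HOME_LINK_GBPS))  # 5
print(fair_share_mbps(GATEWAY_GBPS, 5_000))             # 2.0
```

Under these assumptions, five users at full speed fill the gateway, and with 5,000 concurrent remote users each would get about 2 Mbps through it, a thousandth of what their home connection can deliver.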

The model doesn’t scale: Data-traffic volume is only going to increase, forcing enterprises that rely on archaic hardware-based security to keep up by duplicating stack after stack of security appliances. This is true today and will be especially true in the near future as 5G rollouts continue and low-Earth-orbit satellite internet (such as Starlink) extends coverage toward the entire world.

Back to the future: proxy security in the cloud, internet as corporate network

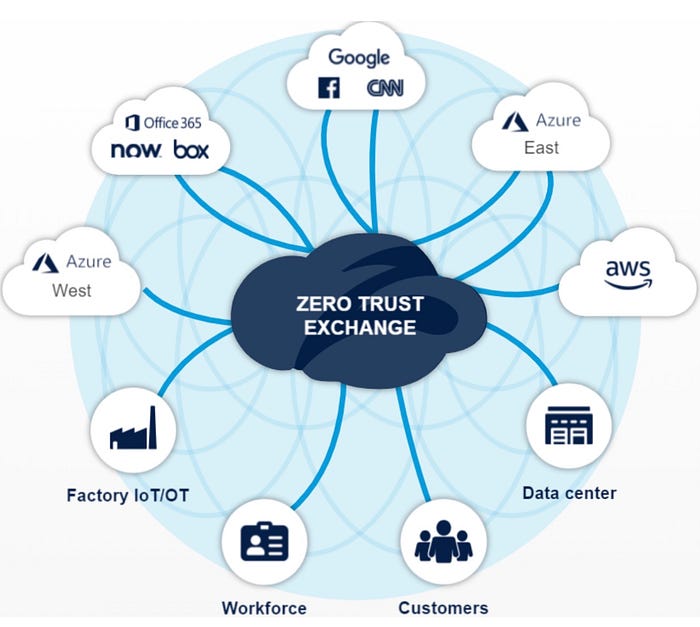

More and more enterprise applications, including custom-developed ones, are cloud-based. It’s possible, practical, and imperative for enterprises today to use the internet as the primary fabric for connecting employees and partners to each other, and directly to applications. Doing so requires a proxy model for cybersecurity, with distributed security delivered at the cloud edge, close to every user.

The Zscaler Zero Trust Exchange embodies this cloud-based, proxy-security architecture. Zscaler lets every site, remote user, and home user connect directly to a service (e.g., Salesforce) without traffic being backhauled to a distant DMZ. Latency drops to near zero (under 20 ms), improving performance, user experience, and productivity. The cloud model scales, and bandwidth bottlenecks are eliminated.

Under the hood, the internet is a distributed networking platform: essentially a BGP-peered network between cloud providers and internet service providers (ISPs). Zscaler peers directly with both cloud providers and ISPs to take advantage of the internet’s underlying exchange infrastructure. Zscaler also intentionally does not run its own backbone, which removes the temptation to backhaul traffic to influence peering ratios and save costs. The path an enterprise connection takes over the internet is optimized between the connection point and the cloud service, delivering data over the fastest available route while every packet is still inspected. Users experience this as a big improvement in application performance and interactivity.
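BGP itself chooses among candidate routes using an ordered list of route attributes rather than measured latency. The Python sketch below models only two of the early steps of that decision process (highest LOCAL_PREF, then shortest AS_PATH) to illustrate why a direct peering session at an exchange tends to beat a path through several transit providers. Real routers apply further tie-breakers (origin, MED, eBGP over iBGP, IGP cost, and others); the `Route` type, next-hop names, and route data here are hypothetical and use documentation prefixes and AS numbers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    prefix: str
    next_hop: str
    local_pref: int
    as_path: tuple[int, ...]


def best_route(candidates: list[Route]) -> Route:
    """Pick a route by highest LOCAL_PREF, then shortest AS_PATH.

    A simplified subset of the BGP best-path process: any remaining tie
    falls back to the first candidate in list order.
    """
    return min(candidates, key=lambda r: (-r.local_pref, len(r.as_path)))


# Hypothetical routes to a SaaS prefix (documentation prefix and AS numbers).
direct_peer = Route("203.0.113.0/24", "ix-peer", 100, (64500,))
via_transit = Route("203.0.113.0/24", "transit-a", 100, (64501, 64502, 64500))

print(best_route([via_transit, direct_peer]).next_hop)  # ix-peer
```

A shorter AS path is only a proxy for a faster one, but peering directly with cloud providers and ISPs tends to produce exactly the short paths this rule favors.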

Companies moving to the cloud & becoming digital must re-architect networks.

The way users work and the way B2B supply chains operate have both shifted to the internet and to cloud-based applications (CRM, O365, Workday, Coupa). Data traffic patterns have changed significantly over the last twenty years and will keep shifting as enterprise users embrace the cloud and enterprises digitize their own supply chains. A cloud-service/internet-centric architecture is no longer optional but a requirement, as the share of cloud traffic grows over time. Such an approach, like the Zscaler architecture, takes advantage of affordable bandwidth at the internet’s edge, cloud-delivered policy-based security, low-latency connections, application-location independence, and reduced costs.

People often ask why I work with Zscaler. I work with Zscaler because it represents the future of network connectivity: direct, fast, secure, and efficient. I first met Jay and Manoj over breakfast at a restaurant when I was CTO at UBS, and we ended up writing all over the paper tablecloth about this architecture. It is a requirement for reaching that idealized end state of enabling cloud-based work and delighting enterprise customers, their partners, and their employees. Zscaler delivers the network I had envisioned after 9/11, when “necessity was the mother of invention,” and now anybody can take advantage of it!

Last updated 10/2/2021

Reviewed by ex-Merrill 9/11 network team.